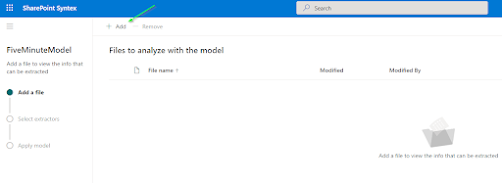

In a previous blog post, SharePoint Syntex in 5 Minutes, we covered using SharePoint Syntex to auto-tag invoices with a content type. Sometimes a document needs more processing outside of SharePoint before it can be uploaded, or external systems must be queried for metadata properties. That kind of functionality can become very complex to build with a Power App or Flow. Microsoft provides AI services in Azure that read invoices and return the extracted fields, which can then feed business logic that goes beyond what Syntex can do. These services are exposed as a consumption-based API: you upload an invoice for processing and get back the same metadata results that SharePoint Syntex provides.

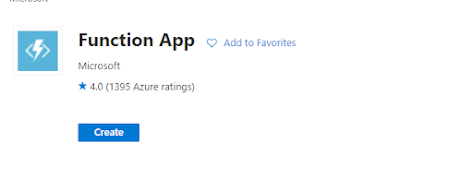

Setting up Azure

Consuming the service

Azure.AI.FormRecognizer

Azure.Storage.Blobs

System.Drawing.Common

PdfLibCore

    <Window x:Class="FiveMintueInvoiceTagger.MainWindow"
            xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"
            xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"
            xmlns:d="http://schemas.microsoft.com/expression/blend/2008"
            xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"
            xmlns:local="clr-namespace:FiveMintueInvoiceTagger"
            mc:Ignorable="d"
            Title="Five Minute Invoice Tagger" Height="1000" Width="1600">
        <Grid>
            <Grid.ColumnDefinitions>
                <ColumnDefinition Width="500"/>
                <ColumnDefinition Width="1100"/>
            </Grid.ColumnDefinitions>
            <Grid.RowDefinitions>
                <RowDefinition Height="50"/>
                <RowDefinition Height="750"/>
                <RowDefinition Height="750"/>
            </Grid.RowDefinitions>
            <StackPanel Grid.Column="0" Grid.Row="0">
                <Label Content="Select an invoice..."/>
                <Button Click="UploadFile_Click" Content="Select Invoice"/>
            </StackPanel>
            <Image Grid.Column="0" Grid.Row="1" Height="800" Name="InvoiceImage" Width="450"/>
            <DataGrid Grid.Column="1" Grid.Row="1" Height="800" Name="DocumentProperties" Width="1050"/>
            <Label Grid.Column="0" Grid.Row="2" Name="ErrorMsg"/>
        </Grid>
    </Window>

    public class InvoiceProperty
    {
        // Field name found on the invoice
        public string Field { get; set; }

        // Field value
        public string Value { get; set; }

        // How confident the AI is that the field value is correct
        public string Score { get; set; }
    }

    using System;
    using System.Collections.Generic;
    using System.IO;
    using System.Threading.Tasks;
    using System.Windows;
    using System.Windows.Media.Imaging;
    using Azure;
    using Azure.AI.FormRecognizer.DocumentAnalysis;
    using Azure.Storage.Blobs;
    using PdfLibCore;
    using PdfLibCore.Enums;

    private async void UploadFile_Click(object sender, RoutedEventArgs e)
    {
        try
        {
            ErrorMsg.Content = "";

            // We only want PDF invoices
            Microsoft.Win32.OpenFileDialog openFileDlg = new Microsoft.Win32.OpenFileDialog();
            openFileDlg.Filter = "Pdf Files|*.pdf";

            // Launch OpenFileDialog by calling ShowDialog method
            Nullable<bool> result = openFileDlg.ShowDialog();

            // Continue only if the user actually selected a file
            if (result == true)
            {
                // Upload to Azure blob storage so Azure AI can access the file
                string invoicePath = await UploadInvoiceForProcessing(openFileDlg.FileName);

                // Start invoice tagging; the task is awaited below
                Task<List<InvoiceProperty>> invoicePropertiesTask = GetDocumentProperties(invoicePath);

                // Convert the PDF to an image so we can view it next to the properties
                UpdateInvoiceImage(openFileDlg.FileName);

                // Wait for Azure to return results, then bind them to our data grid
                DocumentProperties.ItemsSource = await invoicePropertiesTask;
            }
        }
        catch (Exception ex)
        {
            ErrorMsg.Content = ex.Message;
        }
    }

    private async Task<string> UploadInvoiceForProcessing(string FilePath)
    {
        // Connection string of the storage account (left blank here on purpose)
        string cs = "";
        string fileName = System.IO.Path.GetFileName(FilePath);
        Console.WriteLine("File name {0}", fileName);

        // "invoice" is the name of our blob container where we can view documents in Azure
        // A BlobClient points at a single blob, so we create one for each file we upload
        BlobClient blob = new BlobClient(cs, "invoice", fileName);

        // Gets a file stream to upload to Azure
        using (FileStream stream = File.Open(FilePath, FileMode.Open))
        {
            // overwrite: true lets the same invoice be selected again without a conflict error
            await blob.UploadAsync(stream, overwrite: true);
        }

        // Placeholder: replace with the base URL of the blob container
        return "blob base storage url" + fileName;
    }
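The upload method returns a placeholder string joined with the file name. As a minimal sketch of how that URL could be assembled so that file names containing spaces or other reserved characters stay valid, the helper below percent-encodes the file name. The class name `BlobUrlHelper`, the method `BuildBlobUri`, and the example account URL are hypothetical and not part of the original project; only the `invoice` container name comes from the code above.

```csharp
using System;

public static class BlobUrlHelper
{
    // Hypothetical helper: joins a storage account URL, a container name, and a
    // file name into an absolute blob URI, percent-encoding the file name.
    public static Uri BuildBlobUri(string accountUrl, string container, string fileName)
    {
        string baseUrl = accountUrl.TrimEnd('/');
        string encodedName = Uri.EscapeDataString(fileName);
        return new Uri($"{baseUrl}/{container}/{encodedName}");
    }
}
```

For example, `BlobUrlHelper.BuildBlobUri("https://example.blob.core.windows.net", "invoice", "March Invoice.pdf").AbsoluteUri` yields `https://example.blob.core.windows.net/invoice/March%20Invoice.pdf`. Returning the `Uri` property of the `BlobClient` after the upload is likely a simpler alternative. Either way, the analysis service has to be able to read the URL, so the blob needs to be publicly readable or the URL needs a SAS token appended.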

    private async Task<List<InvoiceProperty>> GetDocumentProperties(string InvoicePath)
    {
        List<InvoiceProperty> invoiceProperties = new List<InvoiceProperty>();

        // Endpoint and key found in the Azure AI service
        string endpoint = "ai service url";
        string key = "ai service key";
        AzureKeyCredential credential = new AzureKeyCredential(key);
        DocumentAnalysisClient client = new DocumentAnalysisClient(new Uri(endpoint), credential);

        // Create the Uri for the invoice
        Uri invoiceUri = new Uri(InvoicePath);

        // Analyze the invoice with the prebuilt invoice model and wait for completion
        AnalyzeDocumentOperation operation = await client.AnalyzeDocumentFromUriAsync(WaitUntil.Completed, "prebuilt-invoice", invoiceUri);
        AnalyzeResult result = operation.Value;

        // Iterate the results and populate the list of field values
        for (int i = 0; i < result.Documents.Count; i++)
        {
            AnalyzedDocument document = result.Documents[i];
            foreach (string field in document.Fields.Keys)
            {
                DocumentField documentField = document.Fields[field];
                InvoiceProperty invoiceProperty = new InvoiceProperty()
                {
                    Field = field,
                    Value = documentField.Content,
                    // Confidence is nullable, so Score may be null
                    Score = documentField.Confidence?.ToString()
                };
                invoiceProperties.Add(invoiceProperty);
            }
        }

        return invoiceProperties;
    }
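Because every field comes back with a confidence score, one simple piece of business logic is to flag the fields a person should double-check. The sketch below is one possible approach, not part of the original application: the `InvoiceReview` class, the `NeedsReview` method, and the threshold value are hypothetical. `InvoiceProperty` is repeated so the snippet stands alone, and the score is parsed with the current culture because it was produced by a plain `ToString()` call.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class InvoiceProperty
{
    public string Field { get; set; }
    public string Value { get; set; }
    public string Score { get; set; }
}

public static class InvoiceReview
{
    // Hypothetical helper: returns the properties whose score is missing,
    // cannot be parsed, or falls below the given threshold.
    public static List<InvoiceProperty> NeedsReview(IEnumerable<InvoiceProperty> properties, float threshold)
    {
        return properties
            .Where(p => !float.TryParse(p.Score, out float score) || score < threshold)
            .ToList();
    }
}
```

Calling `InvoiceReview.NeedsReview(invoiceProperties, 0.8f)` on the list returned by `GetDocumentProperties` would give the rows to highlight in the data grid. The 0.8 cutoff is only an illustrative value and would need tuning against real invoices.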

    private void UpdateInvoiceImage(string FilePath)
    {
        using (var pdf = new PdfDocument(File.Open(FilePath, FileMode.Open)))
        {
            // For this example we only want to show the first page
            if (pdf.Pages.Count > 0)
            {
                var pdfPage = pdf.Pages[0];

                // Page size is in points (1/72 inch), so scale by DPI to get pixels
                var dpiX = 600D;
                var dpiY = 600D;
                var pageWidth = (int)(dpiX * pdfPage.Size.Width / 72);
                var pageHeight = (int)(dpiY * pdfPage.Size.Height / 72);

                // Render the page into a bitmap
                var bitmap = new PdfiumBitmap(pageWidth, pageHeight, true);
                pdfPage.Render(bitmap, PageOrientations.Normal, RenderingFlags.LcdText);

                // Load the rendered bitmap into the WPF image control
                BitmapImage image = new BitmapImage();
                image.BeginInit();
                image.StreamSource = bitmap.AsBmpStream(dpiX, dpiY);
                image.EndInit();
                InvoiceImage.Source = image;
            }
        }
    }
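The pixel size used for rendering comes from converting PDF points (1/72 inch) to pixels at the chosen DPI. As a rough worked example of that arithmetic, the sketch below computes the bitmap size for a US Letter page (612 x 792 points). The `RenderSize` class and its methods are hypothetical, and the 4 bytes per pixel figure is an assumption based on the bitmap being created with an alpha channel.

```csharp
using System;

public static class RenderSize
{
    // Converts a page size in points (1/72 inch) to pixels at the given DPI,
    // using the same formula as the rendering code.
    public static (int Width, int Height) ToPixels(double widthPoints, double heightPoints, double dpi)
    {
        int width = (int)(dpi * widthPoints / 72);
        int height = (int)(dpi * heightPoints / 72);
        return (width, height);
    }

    // Approximate in-memory size of the bitmap, assuming 4 bytes per pixel.
    public static long BitmapBytes(int width, int height) => (long)width * height * 4;
}
```

At 600 DPI a US Letter page works out to 5100 x 6600 pixels, or about 134.6 million bytes under the 4-byte assumption, while 96 DPI gives 816 x 1056 pixels. Since the image control in this window is only 450 x 800, a lower DPI may well be enough for the preview.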
