What is caching?

When to use caching.

- Will caching speed up the call? Why add an extra point of failure if we do not see any improvement?

- Does the data change often? If the data is constantly changing, our cache will become outdated very quickly, which defeats the purpose of the cache.

- Is the data accessed often? Memory is expensive, so why store something that no one reads?

- Do I have more than one server? This is more of a "how do I cache my data" question. Having more than one server adds complexity to the system: if each server caches data in its own memory, the caches will drift out of sync, and users can see different results between calls depending on which server answers.
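
The last point is easier to see in code. The sketch below is only an illustration: `LocalCacheServer` is a hypothetical stand-in for a web server that caches reads in its own memory, and a plain dictionary plays the role of the database. Neither is part of the project built later in this post.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a web server that caches reads in its own memory.
public class LocalCacheServer
{
    private readonly Dictionary<string, string> _cache = new Dictionary<string, string>();
    private readonly Dictionary<string, string> _database;

    public LocalCacheServer(Dictionary<string, string> database) => _database = database;

    // Return the local copy if there is one; otherwise load it and keep it.
    public string Read(string key)
    {
        if (!_cache.TryGetValue(key, out var value))
        {
            value = _database[key];
            _cache[key] = value;
        }
        return value;
    }

    // Update the database, but refresh only this server's cache.
    public void Write(string key, string value)
    {
        _database[key] = value;
        _cache[key] = value;
    }
}

public static class Demo
{
    public static void Main()
    {
        var database = new Dictionary<string, string> { ["subject"] = "Spring sale" };
        var serverA = new LocalCacheServer(database);
        var serverB = new LocalCacheServer(database);

        serverA.Read("subject");
        serverB.Read("subject"); // both servers now hold a cached copy

        serverA.Write("subject", "Summer sale");

        Console.WriteLine(serverA.Read("subject")); // prints "Summer sale"
        Console.WriteLine(serverB.Read("subject")); // prints "Spring sale" (stale)
    }
}
```

Server B keeps serving the old value because nothing told it the data changed. A cache that all servers share, such as Redis, avoids this particular problem.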

How do I cache my data?

Creating a Redis Caching database in 5 minutes.

In this blog, we will be using an instance of Redis deployed to Azure. Setup is easy: search for "Azure Cache for Redis" and select your instance size.

Azure Setup

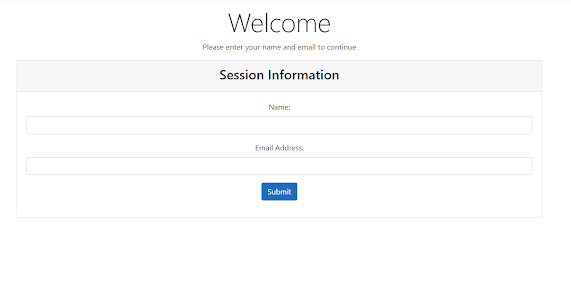

Using Redis Cache in 5 minutes

dotnet new mvc -n "RedisCacheExample"

// Requires the StackExchange.Redis and Newtonsoft.Json packages:
// using StackExchange.Redis; using Newtonsoft.Json;
[HttpPost, ValidateAntiForgeryToken]
public async Task<IActionResult> Index(SessionModel Model)
{
    // Create a unique session id
    Guid sessionId = Guid.NewGuid();

    // This is a 5 minute project, so we are going to code in the controller.
    // Create a connection to Redis (replace "" with your Redis connection string)
    using (ConnectionMultiplexer redis = ConnectionMultiplexer.Connect(""))
    {
        // Get the database; with no arguments this returns the default database
        var db = redis.GetDatabase();

        // Add the session information to Redis with a 10 minute expiration time
        await db.StringSetAsync(sessionId.ToString(), JsonConvert.SerializeObject(Model), TimeSpan.FromMinutes(10));
    }

    // Session created, now go to campaigns
    return RedirectToAction("Index", "Campaigns", new { SessionId = sessionId.ToString() });
}
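
The action above opens a new Redis connection on every request, which keeps the example short. The StackExchange.Redis documentation recommends sharing one `ConnectionMultiplexer` between callers instead of creating one per operation. A minimal sketch of that idea follows. It assumes a hypothetical `RedisConnection` helper class, which is not part of the original project, and keeps the same empty connection-string placeholder.

```csharp
using System;
using StackExchange.Redis;

// Hypothetical helper, not part of the original project.
public static class RedisConnection
{
    // Placeholder: replace with your Redis connection string.
    private const string ConnectionString = "";

    // Lazy<T> defers connecting until first use and is thread-safe by default,
    // so every request ends up sharing the same multiplexer.
    private static readonly Lazy<ConnectionMultiplexer> LazyConnection =
        new Lazy<ConnectionMultiplexer>(() => ConnectionMultiplexer.Connect(ConnectionString));

    public static ConnectionMultiplexer Connection => LazyConnection.Value;
}
```

With something like this in place, an action could call `RedisConnection.Connection.GetDatabase()` directly and drop the `using` block, since the shared connection is meant to stay open for the lifetime of the app.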

// Requires: using System.Data.SqlClient; (or Microsoft.Data.SqlClient)
async Task<List<CampaignTypes>> GetCampaigns()
{
    // Replace with your SQL connection string
    string cs = "";

    // List to hold our campaigns
    List<CampaignTypes> types = new List<CampaignTypes>();

    // Connect to SQL; the using block closes the connection when it exits,
    // so no explicit Close() call is needed
    using (SqlConnection connection = new SqlConnection(cs))
    {
        // Open our SQL connection
        await connection.OpenAsync();

        // Complex SQL query worthy of being cached
        using (SqlCommand cmd = new SqlCommand(@"select Count(dbo.campaign_tracking.Campaign) EmailsOpened, dbo.campaigns.CampaignId, dbo.campaigns.Subject from dbo.campaign_tracking
right join dbo.campaigns on dbo.campaign_tracking.Campaign = dbo.campaigns.CampaignId
Group By dbo.campaign_tracking.Campaign, dbo.campaigns.CampaignId, dbo.campaigns.Subject", connection))
        {
            // Execute the query
            using (SqlDataReader reader = await cmd.ExecuteReaderAsync())
            {
                while (await reader.ReadAsync())
                {
                    // Object for storing campaign information.
                    // A SQL NULL comes back as DBNull.Value, never as a C# null.
                    CampaignTypes type = new CampaignTypes()
                    {
                        CampaignId = reader["CampaignId"] != DBNull.Value ? reader["CampaignId"].ToString() : "invalid id",
                        Subject = reader["Subject"] != DBNull.Value ? reader["Subject"].ToString() : "Subject not found",
                        EmailCount = reader["EmailsOpened"] != DBNull.Value ? Convert.ToInt32(reader["EmailsOpened"]) : 0
                    };
                    types.Add(type);
                }
            }
        }
    }

    // Return our list of campaigns
    return types;
}

public async Task<IActionResult> Index(string Sessionid)
{
    // Create a connection to Redis (replace "" with your Redis connection string)
    using (ConnectionMultiplexer redis = ConnectionMultiplexer.Connect(""))
    {
        // Get the Redis database
        var db = redis.GetDatabase();

        // Initialize the view model so that it is not null for our view
        CampaignsModel campaigns = new CampaignsModel()
        {
            CampaignTypes = new List<CampaignTypes>(),
            Session = new SessionModel()
        };

        // Check if the session id exists in Redis
        if (await db.KeyExistsAsync(Sessionid))
        {
            // Session id exists in Redis, so check for the campaign cache in Redis
            if (await db.KeyExistsAsync("CampaignTypes"))
            {
                // Campaigns are cached, get the data from Redis
                var campaignCache = await db.StringGetAsync("CampaignTypes");
                campaigns.CampaignTypes = JsonConvert.DeserializeObject<List<CampaignTypes>>(campaignCache);
            }
            else
            {
                // Campaigns are not cached, get the campaigns from SQL
                campaigns.CampaignTypes = await GetCampaigns();

                // Save the campaigns to Redis for future use
                await db.StringSetAsync("CampaignTypes", JsonConvert.SerializeObject(campaigns.CampaignTypes), TimeSpan.FromMinutes(5));
            }

            // Pull the session information from Redis
            var session = await db.StringGetAsync(Sessionid);
            campaigns.Session = JsonConvert.DeserializeObject<SessionModel>(session);
            campaigns.Session.Id = Sessionid;

            // A refresh of the page should keep our session open for another 10 minutes
            await db.KeyExpireAsync(Sessionid, TimeSpan.FromMinutes(10));

            return View(campaigns);
        }
        else
        {
            // Session expired
            return RedirectToAction("Index", "Home");
        }
    }
}
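
The "check Redis, fall back to SQL, then save to Redis" steps in this action can be pulled into a small reusable helper. The following is a sketch under assumptions, not the original project's code: `RedisCacheExtensions` and `GetOrSetAsync` are hypothetical names, and values are stored as JSON strings as in the code above. It reads the key once and checks `HasValue`, which saves a round trip compared with calling `KeyExistsAsync` and then `StringGetAsync`, and avoids the case where the key expires between the two calls.

```csharp
using System;
using System.Threading.Tasks;
using Newtonsoft.Json;
using StackExchange.Redis;

// Hypothetical helper, not part of the original project.
public static class RedisCacheExtensions
{
    // Returns the cached value for key if present; otherwise calls load,
    // stores the result as JSON with the given expiration, and returns it.
    public static async Task<T> GetOrSetAsync<T>(
        this IDatabase db, string key, Func<Task<T>> load, TimeSpan expiry)
    {
        // A single read replaces the KeyExistsAsync + StringGetAsync pair.
        RedisValue cached = await db.StringGetAsync(key);
        if (cached.HasValue)
        {
            return JsonConvert.DeserializeObject<T>((string)cached);
        }

        // Cache miss: load from the slow source and save it for future calls.
        T value = await load();
        await db.StringSetAsync(key, JsonConvert.SerializeObject(value), expiry);
        return value;
    }
}
```

With this helper, the campaign lookup in the action could shrink to one line: `campaigns.CampaignTypes = await db.GetOrSetAsync("CampaignTypes", () => GetCampaigns(), TimeSpan.FromMinutes(5));`.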