Previously...

We did this to get around limitations in SharePoint Online that cause throttling when trying to create large batches of document uploads. The second part will cover creating a web job that continuously checks the queue for new items and when it discovers these items the job will perform the upload to SharePoint.

**It is worth noting, that .NET 5 has recently came out and this post uses it. Unfortunately .NET standard and .NET 5 do not support cookie based authentication, username/password, anymore and require Azure AD integrations. So this solution turned from a 5 minute demo to a 10 minute demo. Mostly because of the configuration in Azure, and switching from CSOM . The code for Graph adds additional steps because you need site and list ids instead of using the name which CSOM supported. This is the way Microsoft recommends so I updated the code to reflect those changes**

Prepping SharePoint

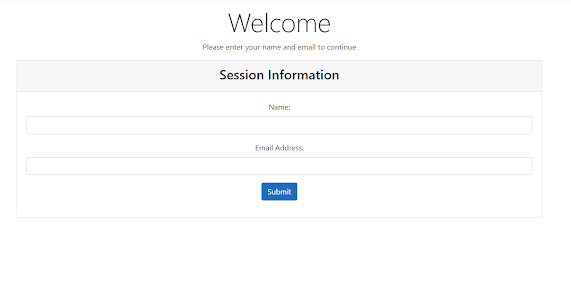

Before we can create our job we need a destination. If you do not have a SharePoint environment please sign up for a free account here:

https://developer.microsoft.com/en-us/microsoft-365/dev-program . Once you have an account, we need to create our library. To do this go to site contents -> new -> document library and create a new document library called "CustomerDocs". With our library created, we need to create our 3 columns"State, City, AccountNumber". Please make sure to use list settings to create the columns. If you use the new column button on the library home page the internal column names will not match and you will need to find them. Creating the columns in library settings our internal columns names will match what we type in. It is also important to point out to not use spaces when creating the columns. This will cause some URL encoding in the column name making it harder to develop. Once the column is created you can change the name without affecting the internal name.

Prepping Azure

Azure AD comes free with your O365 subscription, and this is where we will register our app. Login to Azure and go to Azure Active Directory. From there you will find "App Registrations". This is where we will register our new app that will upload our documents.

we will name our app something we will remember, I chose Graph API Document Upload. We will then set our supported account type. This setting will not matter for us since we will use a token to access the API, so choose "Accounts in this organizational directory only" so that it is secure. You can leave the web blank, we will set that up next.

Once your app is created note the Application ID and Directory ID in the overview section, we will need these for our app to authenticate. Moving down the left hand navigation click Authentication. If you don't have a platform, click add platform, and select web.

It will then ask for a redirect URL. We can put anything in here since our app will not be using Azure AD credentials to login, we want the token. So I just put https://localhost. What we want form our platfomr is the implicit grant token. Find the section that says Implicit grant and check the boxes for access tokens and ID tokens, and save.

Continuing down the left navigation click on Certificates and secrets. From here, click click New Client Secret. This is a one time code, if you do not capture it when creating you will need to create another one.

Finally we can give our app permissions to SharePoint. In the left navigation, go to API permissions. You should see the default permission for graph "User.Read" we will click add permission. Find Graph API.

We want Application permission and then search Sites. Expand and give Sites.ReadWrite.All. Manage all will also work if you do not want to use the preview.

Finally, we need to grant the app permission. At the top next to "Add a permission" click Grant admin consent for...

Azure is now configured for our application.

Creating a Web Job in 5 minutes

A web job is just a console application that runs on a background process of an azure web application. These jobs can be set to run in intervals or continuously. Jobs will also scale with the azure web service, giving you more instances as you scale out your web application.

Since we will be creating a web job in 5 minutes, this blog post will not go into details about configuring the job using code, we will focus on configuration using the portal instead.

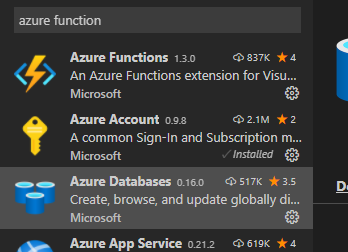

To start, we will create a new console application and name is "SharePointMIgration_WebJob". Our web job will require several nuget packages. We will use O365 Graph API to perform our document upload operations.

Packages to install:

- Microsoft.Graph.Core

- Microsoft.Graph

- Microsoft.Identity.Client

- Azure.Storage.Blobs

- Azure.Storage.Queues

Now that we have our nuget packages installed, we can start developing our web job. For the most part, our process will just be the reverse of adding items to the queue. We will need to manage the queue by popping items when finished uploading to SharePoint. We will need to bring over our CustomerMetadata model we created in the first part of this blog since it represents our queue data.

namespace SharePointMigration_WebJob

{

public class CustomerMetadata

{

public string State {get; set;}

public string City {get;set;}

public string AccountNumber {get;set;}

public string FileName {get;set;}

}

}

Now that we have our data model covered, we will want to pull our data from our queue. The Azure queue has some interesting features, we can peek or receive an item in the queue. Peek allows us to look without a locking the item, while receive will put a temporary hold on the item so other jobs cannot receive or pop the item from the queue. The queue also allows us to do batch requests, this will help with throttling the queue. Our queue function will take two parameters one for the number of messages we want to receive from the queue and how long we want to lock the queue. In our example we will want to load a document every 10 seconds, which comes to approximately 30 documents every 5 minutes. In our function the parameters will be set to the defaults of 1 message and a lock of 30 seconds. When we call the function, it will pull a larger batch.

public static async Task GetFileMetaDataFromQueue(int MessageCount=1, int QueueLock=60)

{

//calls the queue and pulls messages for processing

QueueMessage[] queueMessages = await queue.ReceiveMessagesAsync(MessageCount, TimeSpan.FromSeconds(QueueLock));

return queueMessages;

}

Now that we have our queue items, we will need to get the document that is associated with the queue. Documents are returned as streams, and the Graph API expects a stream to write to SharePoint. So we will just return the stream from our blob storage.

public static async Task GetFileFromAzureBlob(string FileName)

{

BlobClient blobClient = new BlobClient(cs, "customer", FileName);

using (BlobDownloadInfo downloadInfo = await blobClient.DownloadAsync())

{

MemoryStream stream = new MemoryStream();

downloadInfo.Content.CopyTo(stream);

return stream;

}

}

Pulling metadata and documents from Azure queue is straight forward, the Graph API is not. In older SharePoint libraries (SSOM, CSOM, JSOM) you could get sites and libraries by title. With Graph everything is ID based. That means we need to get our site, library, and folder ids before we can add/update SharePoint. These do not result in additional calls, CSOM required us to pull them first as well, but it does make it more challenging to code. Before we can get our IDs we need to setup Graph to use our new app credentials. To do this we will create a function that authenticates to Azure and returns our token.

public static async Task GetGraphAPIToken()

{

string tenantId = ""; //realm

//some service account to upload docs. Documents cannot use app

string clientId = "";

//service account password

string clientSecret = "";

string[] scopes = new string[] {"https://graph.microsoft.com/.default" };

IConfidentialClientApplication app = ConfidentialClientApplicationBuilder.Create(clientId)

.WithClientSecret(clientSecret)

.WithAuthority(new Uri("https://login.microsoftonline.com/" + tenantId))

.Build();

AuthenticationResult result = await app.AcquireTokenForClient(scopes).ExecuteAsync();

//result contains an ExpiresOn property which can be used to cache token

return result.AccessToken;

}

Now that we can pull our token, we will create a function that pulls our Ids. We only need to do this once and will call it at the beginning our our main function to reduce the amount of calls to the Graph API. The Ids are stored in fields so they can be accessed by other functions.

public static async Task GetSiteandListIDs()

{

//Crate our graph client

GraphServiceClient graphClient = new GraphServiceClient("https://graph.microsoft.com/v1.0", new DelegateAuthenticationProvider(

async(requestMessage) =>{

string token = await GetGraphAPIToken();

requestMessage.Headers.Authorization = new System.Net.Http.Headers.AuthenticationHeaderValue("Bearer", token);

}

));

//Gets the root site, if your library lives somewhere else you will need the collection and find it.

var sites = await graphClient.Sites.Root.Request().GetAsync();

siteId = sites.Id;

//gets all libraries. Since our app is written in 5 minutes it is easier to filter the entire collection

var libraries = await graphClient.Sites[siteId].Drives.Request().GetAsync();

var library = libraries.First(f => f.Name == "CustomerDocs");

libraryId = library.Id;

// gets root folder of our library

var rootFolder = await graphClient.Sites[siteId].Drives[libraryId].Root.Request().GetAsync();

rootFolderId = rootFolder.Id;

}

We are ready to start uploading documents to SharePoint. We will follow similar steps for uploading documents as we did for getting our Ids. It is important to note that O365 now refers to libraries as drives. This is because the same functionality can be applied to OneDrive and Teams, which are all backed by SharePoint libraries.

public static async Task UploadDocumentToSharePoint(Stream FileStream, CustomerMetadata Metadata)

{

//Gets graph client. We get this each time to make sure our token is not expired

GraphServiceClient graphClient = new GraphServiceClient("https://graph.microsoft.com/v1.0", new DelegateAuthenticationProvider(

async(requestMessage) =>{

string token = await GetGraphAPIToken();

requestMessage.Headers.Authorization = new System.Net.Http.Headers.AuthenticationHeaderValue("Bearer", token);

}

));

//Uploads our file to our library

DriveItem createDocument = await graphClient.Sites[siteId].Drives[libraryId].Items[rootFolderId].ItemWithPath(Metadata.FileName).Content.Request().PutAsync(FileStream);

//Our metadata for our document

FieldValueSet custData = new FieldValueSet{

AdditionalData = new Dictionary()

{

{"State", Metadata.State},

{"City", Metadata.City},

{"AccountNumber", Metadata.AccountNumber}

}

};

//sets the metada properites for our item

await graphClient.Sites[siteId].Drives[libraryId].Items[rootFolderId].ItemWithPath(Metadata.FileName).ListItem.Fields.Request().UpdateAsync(custData);

//try checking in file, some libraries it is required

try

{

await graphClient.Sites[siteId].Drives[libraryId].Items[createDocument.Id].Checkin().Request().PostAsync();

}

catch(Exception ex){

//ignoring this error becuase library is not set for checkin/out

if(!ex.Message.Contains("The file is not checked out"))

{

throw ex;

}

}

}

Our documents now sit in SharePoint with metadata. The last thing to do is clean up our queues. Remember Microsoft charges an average amount that is in our blob storage, so keeping it with minimal documents as possible will keep costs down. We will use our PopReceipt and MessageId to remove any queue items. We dont want them back in the queue looking for items again, that will break our loop.

public static async Task RemoveItemFromQueue(string MessageId, string Receipt)

{

await queue.DeleteMessageAsync(MessageId, Receipt);

}

public static async Task RemoveDocumentFromQueue(string FileName)

{

BlobClient blobClient = new BlobClient(cs, "customer", FileName);

await blobClient.DeleteAsync();

}

Now that we have all our functions we can put our main loop together. This is a continuous job, so all of our logic will sit in a while loop to keep it running forever. We will get our Ids from the Graph API, and then pull from the queue. Once we have the queue we loop through our items and upload to SharePoint. We will sleep every once and awhile to make sure our app runs at an appropriate speed for SharePoint and eliminate any throttling.

//making this a property so we do not have to keep recreating the object

static QueueClient queue;

//connection string to our storage account, it is shared between blobs and queues.

static string cs = "";

//The site ID our library lives in

static string siteId = "";

//The ID of the library

static string libraryId = "";

//The ID of the root folder in the library

static string rootFolderId = "";

static async Task Main(string[] args)

{

queue = new QueueClient(cs, "customer");

int batchMessageCount = 30; //number of items to pull from queue at once

int queueLock = batchMessageCount * 10; //number of batches time 10 since each message will take 10 seconds to process.

//

await GetSiteandListIDs();

//Creating an infinite loop for our continuous job

while(true)

{

DateTime startTime = DateTime.Now;

try

{

Console.WriteLine("Getting queue");

QueueMessage[] messages = await GetFileMetaDataFromQueue(batchMessageCount, queueLock);

Console.WriteLine("Found {0} items in the queue", messages.Length);

foreach(QueueMessage message in messages)

{

//our cleint job encoded the message, this will decode it

string data = HttpUtility.UrlDecode(message.MessageText);

CustomerMetadata customer = JsonConvert.DeserializeObject(data);

Console.WriteLine("Pulling document {0}", customer.FileName);

using(MemoryStream document = await GetFileFromAzureBlob(customer.FileName))

{

Console.WriteLine("Uploading document {0}", customer.FileName);

await UploadDocumentToSharePoint(document, customer);

}

Console.WriteLine("Upload was successful, removing {0} from the queue", customer.FileName);

//remove message from queue so it doesnt get pulled again since we were successful

await RemoveItemFromQueue(message.MessageId, message.PopReceipt);

//remove document from storage

await RemoveDocumentFromQueue(customer.FileName);

//sleep 10 seconds before next call to sharepoint to prevent throttling.

System.Threading.Thread.Sleep(10000);

}

}

catch(Exception ex)

{

Console.WriteLine("Error writing queue to SharePoint: " + ex.Message);

}

Console.WriteLine("Finished with current queue list, will wait 5 minutes from last call");

//we want our job to sleep if it takes less than 5 minutes to process the queue. This is to prevent throttling

DateTime endTime = DateTime.Now;

double totalMinutes = endTime.Subtract(startTime).TotalMinutes;

if(totalMinutes < 5)

{

double sleepTime = (5-totalMinutes) * 60000;

System.Threading.Thread.Sleep(Convert.ToInt32(sleepTime));

}

}

}

Deploying our Web Job

With our web job complete we need to publish it to Azure. To do do this, we just need to create a release and zip the contents. To create a realease, run the command below:

dotnet publish -c Release

Next we need to add our web job to our Azure web app. If you do not have a web app in Azure you can create one for free. One thing to note, is a web job runs on a schedule so for this demo a free account will work to test our job. A web job will not run in a production environment unless your app is set to be "always on". Which is not allowed in a free version.

Once your application is created, find the web jobs section under setting in the left navigation.

Select "Add" at the top and enter a friendly name; I chose SPSync. Next select the file to upload. From the upload screen go to where you published the .NET project, which should be the bin folder of your web job project if you followed this tutorial. In the bin folder you will see a release folder. Click in and depending on your dotnet core version you should see another file, mine says ".net 5.0.Zip". Zip this file up and upload the zip.

Lastly, let us set the job to continuous so it will continuously check our queue for new documents.

Once our job publishes, you will see its status changed to running. Click into the logs to review that items are loading. Go back to your queue and the documents will be gone!

Azure, SharePoint, O365, SP, C#, Storage, Storage Account, Blob Storage, Azure Blob, Azure Blob Storage, Azure Storage Account, Azure Blob Storage Account, Azure Queue, Azure Queue Account, Queue, Serverless development, devleopment c sharp, 429, throttling, SharePoint Online, Office 365